by gustaf | Jun 6, 2018

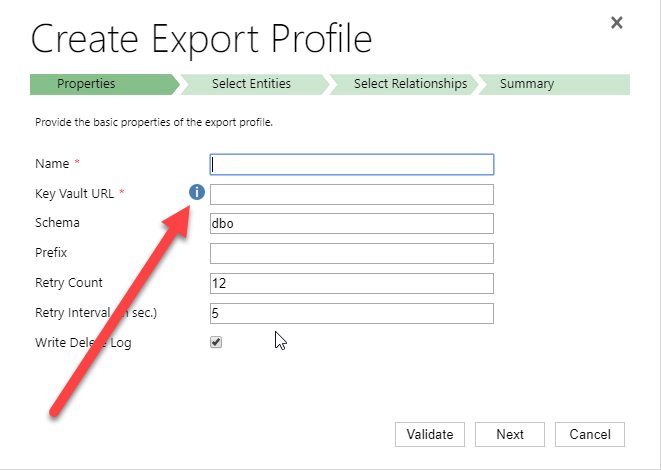

Setting up the Dynamics 365 Data Export Service requires an Azure Key Vault, which is typically created using a PowerShell script that can be found in the Data Export Service setup wizard. However, if you run into issues with the script, it might be easier to do the same steps directly in Azure. This was a tip that my friend and Business Solution MVP Scott Durow recommended. He mentions this in his very instructive video, but doesn’t actually show how, so I thought I’d detail how I made it work.

First some background. The reason I started investigating how to do this manually was that I ran into problems when running the PowerShell script supplied by Microsoft in the wizard.

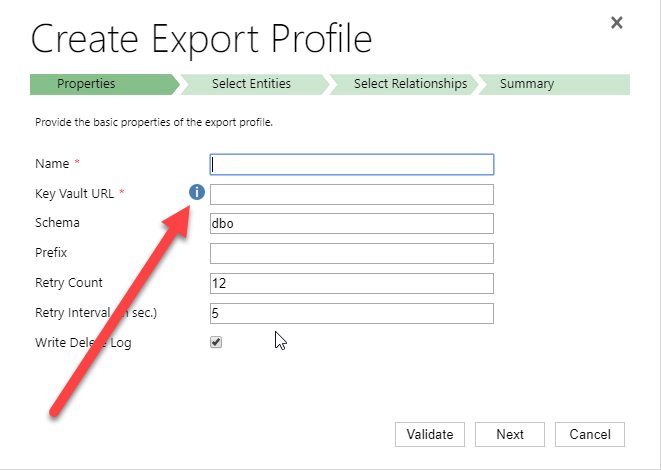

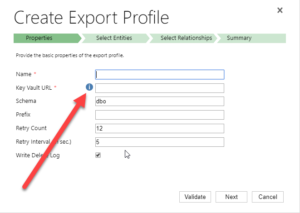

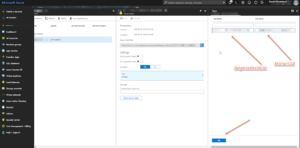

Press the “i” icon to get a window containing the PowerShell script that Microsoft recommends for setting up the Key Vault.

When I ran the PowerShell script as myself (not a global admin), and when I asked a global admin to run it, it failed in the later parts. The key vault was created, but some of the access policies seemed to be missing and it just didn’t work. My user’s rights in Azure were Contributor on the resource group. Interestingly, the global admin and I got different error messages, but when I finally created the key vault manually I could do it all with my own user, so it didn’t seem I was missing any rights.

The first step is to make sure you have all your data straight. The PowerShell script is good for this. Check out Scott’s clip if you want to know how to find the different strings. He shows it very clearly.

Just copied from the PS-Script:

$subscriptionId = '<subscription ID>'

$keyvaultName = 'MyVault'

$secretName = 'MySecretName'

$location = 'North Europe'

$connectionString = 'Server=tcp:<db-name>,1433;Initial Catalog=<catalog>;Persist Security Info=False;User ID={your_username};Password={your_password};MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;'

$organizationIdList = '<DYN365GUID>'

$tenantId = '<AZURE TENANT ID>'

The placeholder parts (the values in angle brackets and curly braces) have to be replaced with your own settings. I will refer to these variables later in this article.
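Since a forgotten placeholder is an easy mistake to make, here is a minimal sketch in Python (not part of Microsoft’s script) that checks a set of settings for leftover placeholders before you paste them anywhere. The function name and the sample dictionary are my own illustration, and the pattern assumes placeholders look like the ones above, so a real value that happens to contain braces would be flagged as well.

```python
import re

# Matches the two placeholder styles used in the settings above:
# <angle brackets> and {curly braces}.
PLACEHOLDER = re.compile(r"<[^<>]+>|\{[^{}]+\}")


def unreplaced_placeholders(settings):
    """Return {setting name: [placeholders still present]} for a dict of strings."""
    found = {}
    for name, value in settings.items():
        hits = PLACEHOLDER.findall(value)
        if hits:
            found[name] = hits
    return found


# Hypothetical sample values, mirroring the variable names in the PS script.
settings = {
    "subscriptionId": "<subscription ID>",
    "keyvaultName": "MyVault",
    "connectionString": "Server=tcp:<db-name>,1433;User ID={your_username};",
}

for name, hits in unreplaced_placeholders(settings).items():
    print(f"{name} still contains: {', '.join(hits)}")
```

An empty result means every placeholder of those two styles has been replaced.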

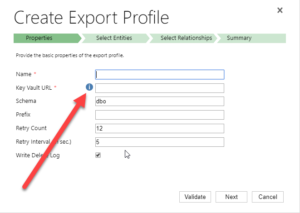

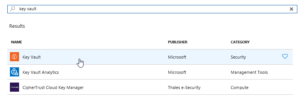

Search for Key Vault and add the “Key vault”, the top one in this picture

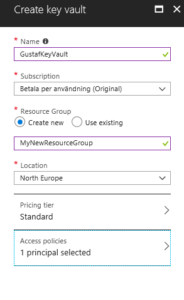

Then we have to set it up, which is not so tricky if you have worked with Azure before. Consider whether you want to use an existing resource group or create a new one. Typically you need to have Azure SQL running as well, so it is a good idea to keep them together in one resource group to be able to see the costs and control who has access. That resource group should therefore already exist; if not, you can create it. I would recommend keeping Azure SQL and the Key Vault in the same resource group. It probably works across different resource groups, but I haven’t tested that.

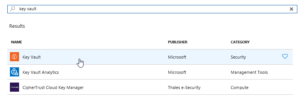

Creating the key vault – in this case I am creating a new resource group, normally it would already exist

Azure will add you as the default principal with access to the key vault. We will add Data Export Service to this later. For now, just create it.

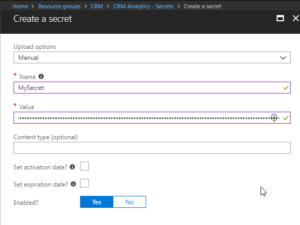

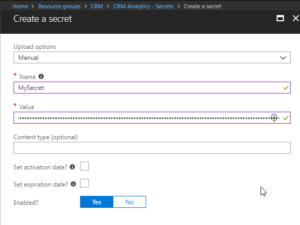

Now we need to open the Key vault and select the “Secrets” section in the menu on the left hand side and press the button:

“+ Generate/Import”

Then you have to enter your secret name ($secretName) as the name and the connection string ($connectionString) as the value.

Creating a secret – $secretName in Name and $connectionString in Value

Press “Create”.

You should now return to the previous screen and see a row for your secret.

Select it.

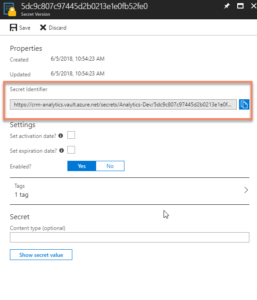

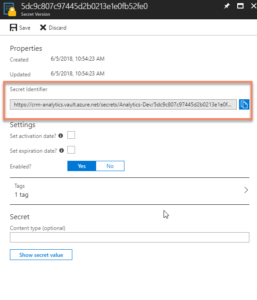

This should open the settings panel for the secret. Press the “Tags” part, which is located in the middle, and add a tag which has the organization ID ($organizationIdList) as the key and the tenant ID ($tenantId) as the value. I have blurred them out below as they are rather private.

Adding a tag with OrgIdList and tenantId to a Secret

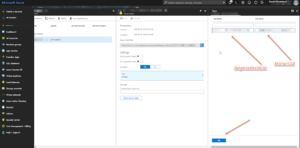

You then need to go back to the Key Vault and click on the “Access Policies” menu item. You should see yourself as a principal, as this was set when we created the key vault. We now need to add Data Export Service as a valid principal with read access rights.

So click “Add”, click “Select Principal” and search for “b861dbcc-a7ef-4219-a005-0e4de4ea7dcf” which is the ID for Data Export Service. It should show up like this:

It needs to have “Secret Management Operations – GET” permissions and nothing else.

Now, go back to the Secret and copy the URI to the Secret.

Getting the URI for the Key Vault Secret

Paste it into the Data Export Service Wizard field for Key Vault.
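If you want to sanity check the URI before pasting it, the sketch below splits it into its parts. It assumes the public Azure cloud, where a secret identifier looks like `https://<vault>.vault.azure.net/secrets/<name>/<version>`; the function name is my own, and sovereign clouds use a different host suffix, so treat this as an illustration rather than a complete validator.

```python
from urllib.parse import urlparse

# Host suffix for the public Azure cloud (an assumption; other clouds differ).
VAULT_SUFFIX = ".vault.azure.net"


def parse_secret_uri(uri):
    """Split a Key Vault secret URI into vault name, secret name and version."""
    parsed = urlparse(uri)
    host = parsed.hostname or ""
    if parsed.scheme != "https" or not host.endswith(VAULT_SUFFIX):
        raise ValueError(f"not a Key Vault URI: {uri}")

    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2 or parts[0] != "secrets":
        raise ValueError(f"not a secret URI: {uri}")

    return {
        "vault": host[: -len(VAULT_SUFFIX)],
        "secret": parts[1],
        # The version segment is missing when the URI points at the latest version.
        "version": parts[2] if len(parts) > 2 else None,
    }


# Hypothetical URI built from the example names used earlier in this post.
info = parse_secret_uri(
    "https://myvault.vault.azure.net/secrets/MySecretName/0123456789abcdef"
)
print(info["vault"], info["secret"], info["version"])
```

The vault and secret names it returns should match $keyvaultName and $secretName from the list of settings.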

Fill in the other information and press validate. Hopefully it will work out well!

Some issues

Being too cheap with the Azure SQL level

If you don’t go for an Azure SQL P1 and choose a lower tier, you might get this warning:

We tried an S0 for our Dev environment and tried to sync a couple of million records, and that just didn’t work; we got tons of errors. We upgraded the Azure SQL database to S2 and then at least we didn’t get any errors. We are planning for P1s in UAT and production.

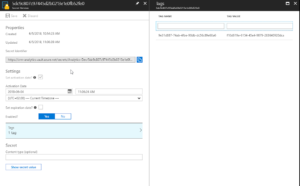

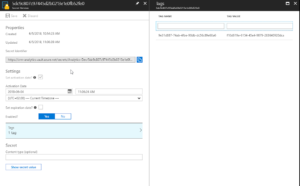

Might have to set activation date on secret

It seems that you might have to set an activation date on the secret. I am not sure why, as the PS script doesn’t seem to do this, but it is not very hard to do.

Added activation date on the Secret from June 4th

Using Database schema that is not created

The default database schema is “dbo” in the Data Export Service Wizard. If you change this to something else like “crm” and you haven’t created this in the database, you will get an error. It is simple to fix, you just have to go into the database and create the schema. To create the schema “crm” open a query and run:

CREATE SCHEMA crm

For more information on how to create schemas, check this site: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-schema-transact-sql?view=sql-server-2017

Once the schema has been created, there should be no problem using it, as long as the user has permission to use it.

I hope this works for you. If you have any questions, don’t hesitate to leave a comment.

Gustaf Westerlund

MVP, Founder and Principal Consultant at CRM-konsulterna AB

www.crmkonsulterna.se

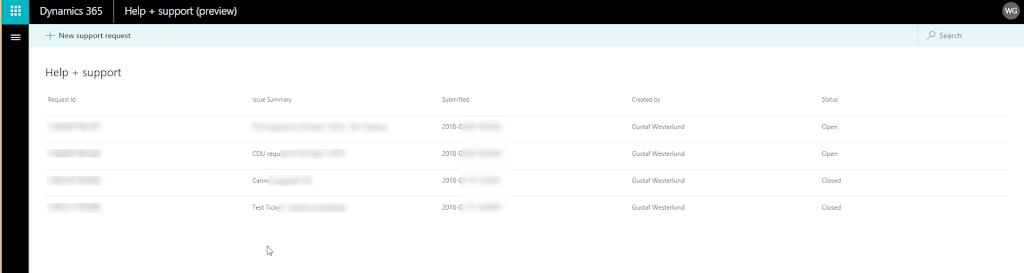

by gustaf | Apr 9, 2018

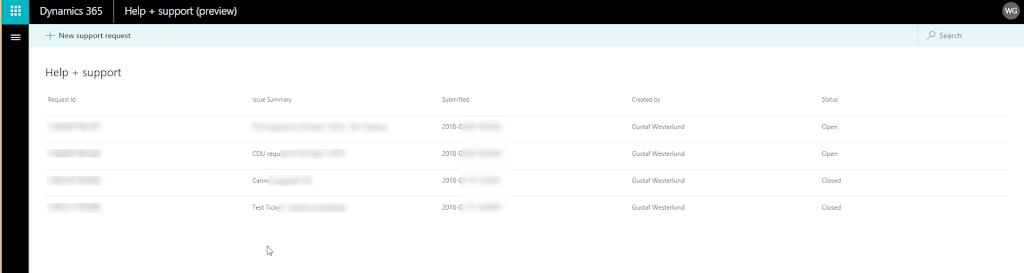

In case you haven’t seen it, the Dynamics 365 Admin Portal is a great place to create and manage your Dynamics 365 tickets.

You get to it using this URL: https://admin.dynamics.com/

I haven’t found a link to the admin portal from the instance manager or any other place in O365 or Dyn365 yet, so I have just created a bookmark for it, and I suggest you do the same.

Also, as I am a frequent Microsoft Support user, and often have customers with many instances, I have tried to consolidate many instances into one ticket. They don’t want that. Better to create one ticket for each instance. (probably gets someone a higher salary too :)) However, I did notice that there seems to be some duplicate detection going on, so try to avoid using the same “Issue Summary” for several tickets – as that will just make the later ones disappear without any error message (hint Microsoft, please fix this! – at least give a decent error message).

In order to create tickets, you need to be either O365 Global Admin or Dynamics 365 Service Administrator. There might be some other admin as well that has the right to create tickets, but I don’t think so.


by gustaf | Feb 25, 2018

Related to my last post, on working with the API quickly, Microsoft have now released official documentation stating that, effective March 19th, they will start limiting the number of API calls allowed per instance, to stop what are called “noisy neighbour” problems.

First of all, read the full article here: https://docs.microsoft.com/en-us/dynamics365/customer-engagement/developer/api-limits

Let’s break this down a bit. 60 000 calls per 5 minutes translates to 200 calls per second. If you exceed this, you will start getting exceptions until the 5 minute period has ended. You are expected to back off and, essentially, handle this. That is the short version. Read the full article for more details.
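To make “back off and handle this” a bit more concrete, here is a minimal sketch in Python of a retry wrapper with exponential back-off. The `ThrottledError` class is a hypothetical stand-in for whatever exception your client library raises when the limit is hit, and the delays are my own picks, not values from Microsoft’s documentation.

```python
import time

CALLS_PER_WINDOW = 60_000
WINDOW_SECONDS = 5 * 60
print(CALLS_PER_WINDOW / WINDOW_SECONDS)  # average calls per second: 200.0


class ThrottledError(Exception):
    """Hypothetical stand-in for the exception raised when the API limit is hit."""


def call_with_backoff(operation, max_attempts=6, base_delay=1.0,
                      max_delay=float(WINDOW_SECONDS), sleep=time.sleep):
    """Run operation(), waiting 1s, 2s, 4s, ... between throttled attempts."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # give up and let the caller decide what to do
            # Never wait longer than one full window; the limit resets after that.
            sleep(min(max_delay, base_delay * 2 ** attempt))
```

You would wrap each API call, for example `call_with_backoff(lambda: client.create(record))`, where `client.create` is whatever call your integration makes. The `sleep` parameter is only there so the waiting can be replaced in tests.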

Update: George Doubinski, a friend of mine and one of the brains of CRM Tip of the Day made me aware of the fact that the limit is per user. I will update the article below on what this means.

What does this mean? Is this a problem?

For most organizations, no. At least the ones I work with are not even close to breaking this. If they are using integration tools like Kingswaysoft or other tools which enable multithreaded integrations, but generally do not need that kind of data throughput, then they might temporarily be shut out, but it should self heal after some time, as after each 5 minute time span you get another 60 000 requests. That could probably quite easily be fixed by checking the settings of the integration tool. Update: Also, if you integrate each system using a separate account, you do not risk one system temporarily blocking many other systems from integrating with Dynamics 365. If you are using normal users, this will of course entail a certain license cost, which is why I generally recommend using app users for integrations, if possible. With this change, you should have one app user for each integrating system.

However, there are some organizations where I foresee issues, and these are organizations which match any combination of the following criteria:

- Third party products, like Marketing Automation (ClickDimensions, FreshRelevance, Salesforce Marketing Cloud), whose vendors have not had time to handle this, or have not got it into their scope yet, and which integrate large amounts of data into Dynamics 365. Update: Especially if the user they use to integrate, the service user, is a normal user, either also used by a person or shared with integrations with other systems.

- Legacy code that has been upgraded to the new SDK but uses an inefficient architecture. It can, for example, have trouble using ExecuteMultiple, which the article above describes as the recommended best practice, typically because the architecture of the code would require major rewriting to allow for ExecuteMultiple. Update: In this case I strongly recommend using a dedicated user for this specific integration, to isolate any limitations set on that user.

- Organizations with multiple heavy integrations to Dynamics 365. It will be hard to ensure that the sum total does not exceed 60k per 5 minutes, and to handle back-off in a controlled way. The only reasonable way would probably be to rewrite the integrations to go through a proxy or queue, like Azure Service Bus Queues, and have a single integration interface. Probably a lot easier to write in a blog article than to do in real life. Update: This was an incorrect deduction on my part. As the limit is not based on the sum total but on the sum per user, this is not a risk unless many integrations use the same user, which I do not recommend.

- Organizations with complex heavy integrations with thousands of lines of integration code that need to be redesigned, rewritten, tested and deployed before March 19th. And there is no way to test it, as there is no TAP/Beta program for this “Feature”. Update: This is still very relevant. Even such a small change as changing the integrating user for an external system should be thoroughly tested, and for larger implementations that can be hard to do before March 19.
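To illustrate why the per-user limit makes separate integration users so useful, here is a small model in Python. It is only a sketch: I am assuming a simple fixed 5 minute window per user, which may not be how Microsoft actually counts, and the class and user names are made up.

```python
from collections import defaultdict

LIMIT = 60_000         # calls allowed per user and window
WINDOW_SECONDS = 300   # 5 minutes


class PerUserWindow:
    """Simplified fixed-window call counter, tracked separately for each user."""

    def __init__(self, limit=LIMIT, window=WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        self._counts = defaultdict(int)  # (user, window index) -> calls made

    def allow(self, user, now):
        """Return True and count the call if the user is still under the limit."""
        key = (user, int(now // self.window))
        if self._counts[key] >= self.limit:
            return False
        self._counts[key] += 1
        return True


# Tiny limit so the effect is easy to see.
limiter = PerUserWindow(limit=3)
print([limiter.allow("marketing-user", now=0) for _ in range(4)])
print(limiter.allow("orders-user", now=0))
print(limiter.allow("marketing-user", now=300))
```

In this model the marketing user is blocked on its fourth call, while the orders user is unaffected, and the marketing user is allowed again once the next window starts. If both integrations shared one user, the orders calls would be blocked too.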

Example

One example I see is a typical B2C organization running Dynamics 365 with a marketing automation add-on with email tracking and web tracking. They also have a very time-critical integration of orders, to be able to handle any incidents. Even if the order integration in itself does not reach the limits, it is not unforeseeable that a mail blast, especially a good mail blast, after which many customers read the email, click the links, go to the site, check the offers and start ordering, would cause a surge of traffic from the marketing automation integration to the Dynamics 365 API. This of course depends on the settings of the integration, but perhaps it is critical that all events be tracked in Dynamics. With a mail blast to, let’s say, 1 million recipients, the 60k/5 min limit would be hit quickly. When this happens, it would also block all orders from going to Dynamics, effectively stopping any work with new incidents in the system.

Update: This is, of course, only relevant if both systems are integrating using the same user. Don’t. However, the marketing automation system above would hit the limit fast anyway, and if the supplier of this system hasn’t had time to update their product/service, it would handle this incorrectly. I recommend checking the integrating systems and trying to turn down the verbosity of what they write to Dynamics 365. Then, after March 18, when we see how this falls out in detail, you can test a more verbose setting in a test environment and see how that falls out.
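A rough back-of-the-envelope calculation for the mail blast, sketched in Python. The big assumption, which is mine, is that each recipient results in exactly one API call; in reality opens, clicks and page views would each add more, so the real number would be higher.

```python
LIMIT_PER_WINDOW = 60_000   # calls per user per window
WINDOW_MINUTES = 5


def windows_needed(api_calls, limit=LIMIT_PER_WINDOW):
    """Number of 5 minute windows one user needs to get api_calls through."""
    return (api_calls + limit - 1) // limit  # integer ceiling division


# Assumption: one tracked event (one API call) per recipient.
recipients = 1_000_000
windows = windows_needed(recipients)
print(f"{windows} windows, so up to {windows * WINDOW_MINUTES} minutes")
```

For 1 million calls that is 17 windows, so the tracking data would trickle in over well over an hour even if the integration handles the throttling perfectly.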

Summary

For small and medium companies with low complexity, working mainly with B2B, I don’t see that much of a problem. Larger companies with complex integrations, large databases, and integrations to web tracking and email tracking, which will often be B2C companies with higher levels of automation and larger customer databases, will probably have larger problems with this and need to start thinking about it right now.

We need to come back to this subject post March 19, to see how this will really work. But I think the real problem will be for the larger orgs with many and heavy integrations.

I would be really glad to hear your views on this, like I got George’s.


by gustaf | Feb 8, 2018

A quick one today…

I needed to delete a couple of million records for a customer and the natural thing was to use the Bulk Deletion service. Well, I turned it on and it was extremely slow. I only got about 10 records/s, which would cause the entire delete to take over a week. I have checked with Microsoft and this is not a bug; it is working as designed and is not designed to be super fast. According to Microsoft, bulk deletion jobs are put on the async queue with low priority to give other, more important jobs higher priority.

And a favorite quote of mine from Purvin Patel of Microsoft “Does a dump truck need to outrace a Ferrari?” – and I think that the answer to that question is: it depends. Sometimes it does.

Personally I would sometimes like it to be as fast as possible when removing a lot of records.

I also checked to see how fast the deletion would be with SSIS and Kingswaysoft. Used the following settings:

- VM about 5 ms from the Dynamics 365 instance (important that it not be too far, use an Azure VM for this)

- Used 64 threads

- Used Execute Multiple batching with 10 (cannot use more than 10 if you are using a lot of threads, i.e. more than 2)

- VM has 8 virtual cores and 32 GB memory

- Loading in batches of 2000. Only loading the id-column, as that is all that is needed.
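I did this with SSIS and Kingswaysoft, not with code, but the general pattern behind the settings above can be sketched in Python: take a page of 2000 ids, split it into batches of 10, and let 64 worker threads send the batches in parallel. The `delete_batch` callable is hypothetical; it stands for whatever sends one ExecuteMultiple request and returns the number of records it deleted.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, size):
    """Yield consecutive slices of items with at most size elements each."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def delete_page(record_ids, delete_batch, batch_size=10, threads=64):
    """Delete one page of ids in parallel batches; return how many were deleted."""
    batches = list(chunked(record_ids, batch_size))
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # Each worker thread handles one batch (one ExecuteMultiple request) at a time.
        return sum(pool.map(delete_batch, batches))


# Stand-in for the real request: pretend every id in the batch was deleted.
page_of_ids = list(range(2000))
print(delete_page(page_of_ids, delete_batch=len))
```

Only the ids are passed around, which matches the point above about loading just the id column.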

With this setup, I got somewhere around 345 records deleted per second, which is a tad more than 34 times as fast as the bulk delete.
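To get a feeling for what the two rates mean in wall-clock time, here is a small calculation in Python. The record count is a made-up example, since the exact number for the customer is not given here; only the two rates come from the measurements above.

```python
def hours_to_delete(records, records_per_second):
    """Hours needed to delete the given number of records at a steady rate."""
    return records / records_per_second / 3600


def speedup(fast_rate, slow_rate):
    """How many times faster the fast rate is compared to the slow one."""
    return fast_rate / slow_rate


RECORDS = 7_000_000  # hypothetical record count, for illustration only

for label, rate in (("Bulk Delete", 10), ("SSIS + Kingswaysoft", 345)):
    print(f"{label}: {hours_to_delete(RECORDS, rate):.1f} hours")

print(f"Speedup: {speedup(345, 10):.1f}x")
```

Swap in your own record count and measured rates to estimate how long a delete would take in your environment.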

So, want to delete a lot of stuff, maybe Bulk Delete is not the way to go. Not yet anyway, let’s hope Microsoft makes it faster!

(this post was updated on Feb 9th, 2018)


by gustaf | Feb 4, 2018

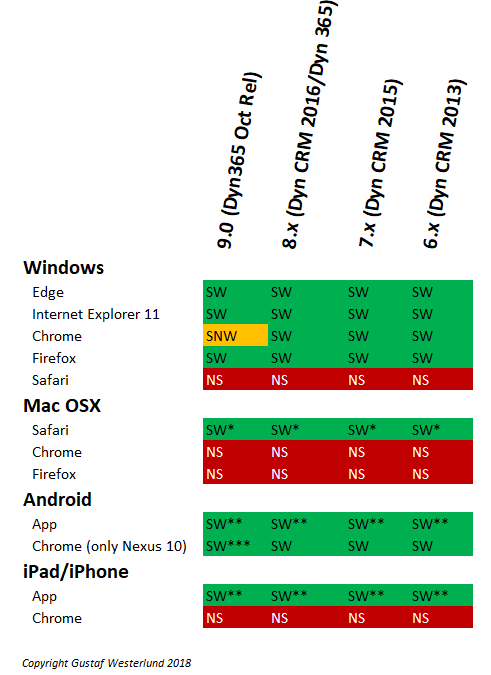

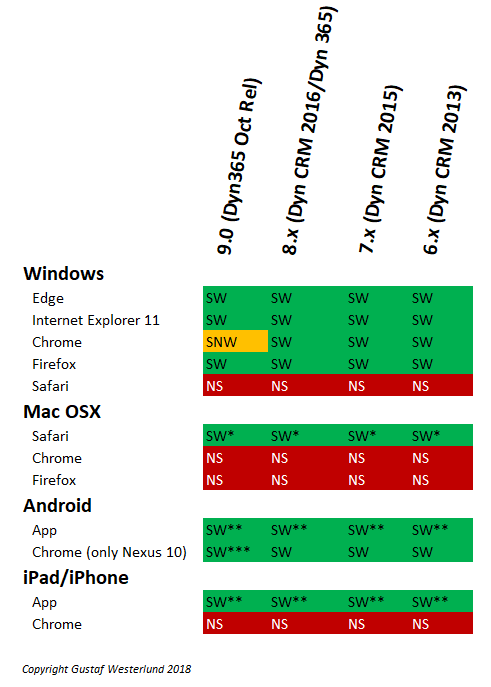

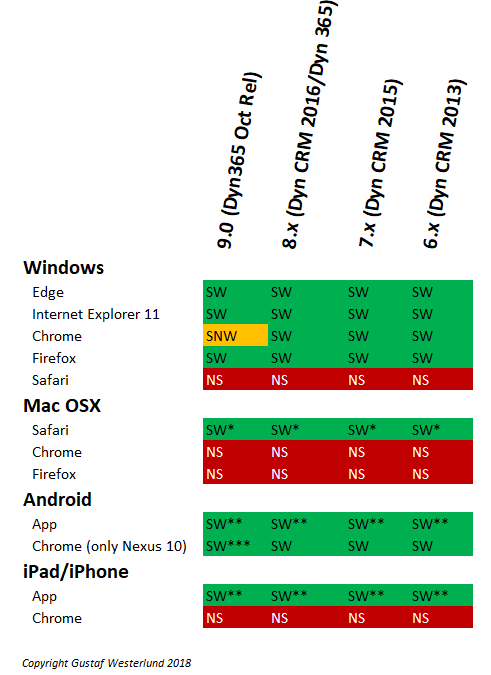

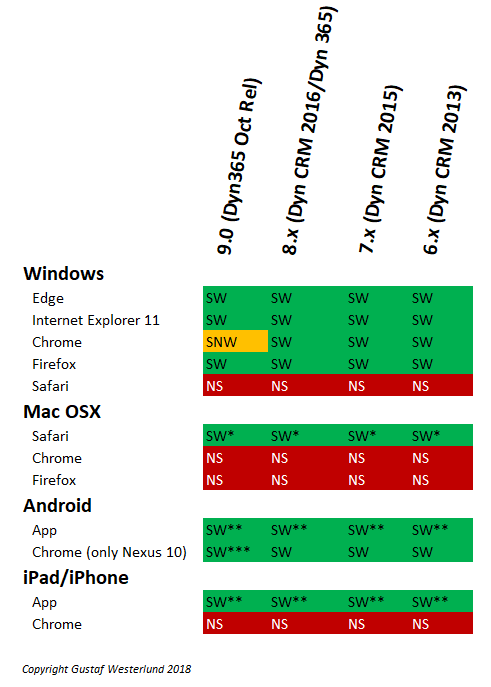

One of my most popular posts is a collation of which browsers are actually supported for Dynamics 365 CE. This is not strange since it is a common issue. We often have customers call our support complaining that Dynamics doesn’t work well, and as described in the original article, the cause is usually that they are using an unsupported browser.

Also, the Microsoft Technet article for this, https://docs.microsoft.com/en-us/dynamics365/customer-engagement/admin/supported-web-browsers-and-mobile-devices, and the detailed version, https://technet.microsoft.com/en-us/library/hh699710.aspx, mainly focus on Edge and Internet Explorer compatibility for the different Windows versions. And despite Microsoft’s hopes, the rest of the world has not totally turned to all of their programs, yet.

There are currently some issues with version 9.0 as well, which is why I thought it might be a good idea to write a new article on which browsers are supported, in an easy-to-scan grid.

A brief explanation of the different abbreviations used in the picture:

- SW – Supported and Working.

- SW* – Supported and Working on OS X as designed but with somewhat limited functionality. If you compare Dynamics running in Safari on OS X with Dynamics running in Edge on Windows 10, you will notice that Edge offers more options.

- SW** – The app is not the same thing as the browser at the moment. The Unified Interface will make them almost the same. There will still be differences, in that the app will have better integration with the device’s hardware, like the camera. Especially in the earlier versions, the app was very limited. It is more powerful now. For field service there is also a special Field Service app which is not as HTML/JS based (it is based on Resco).

- SW*** – I have not had the chance to test if version 9.0 works on a Nexus 10 running Chrome but the official documentation says it should work. However, as v.9.0 does not work with Chrome at the moment, (this will probably be fixed very soon) I would actually be surprised if it worked for Chrome on Nexus 10.

- NS – Not supported. This does not mean, however, that you cannot get it to work. I have heard of people who manage to get Chrome on OS X to go into some “PC” mode which tricks Dynamics. This still doesn’t make it supported, but it might work for you. The problem is that if you have any issues, Microsoft Support could leave you hanging.

- SNW – Supported but Not Working – As for Chrome on Windows, the general story is that Microsoft only supports the latest version of it. However, despite version 9.0 officially supporting Chrome on Windows, it doesn’t seem to actually work. I would presume that they will fix this soon. The problem is that Dynamics 365 won’t actually load.

The support matrix for the browsers is generally a tricky question. The matrix above is also simplified a bit, as I have not included the different operating system versions as a parameter. It can probably be presumed that Microsoft will make greater efforts to ensure that the latest versions of Edge and Internet Explorer, and possibly even some earlier versions that they know customers are using, work well with Dynamics 365. I know some of you will probably think I am crazy for even mentioning Internet Explorer, but I have several customers who still have IE as the company standard browser, and it is not due to Dynamics 365.

As for Firefox, Chrome and Safari, these browsers might be very popular, but from a Microsoft perspective I can see a very tricky situation, and that is that they roll out changes without notifying anyone. This was, for instance, done by Google a couple of years ago when they changed their handling of modal dialogs. This caused many dialog handling features of Dynamics CRM (as it was called at that time) to break. It took some time for Microsoft to identify the change, fix it, make sure there were no regression errors, and roll it through all their testing scenarios, deployment stages and controls before they were able to get a fix out. It did come out quite fast, but there were some people nagging at Microsoft for having a bad product, which I personally find a bit unfair.

The essence of the two paragraphs above is: feel free to use Chrome, Safari or Firefox, but I would suggest always having Edge and/or IE as a backup option in case an update to your main browser severely affects the usage of Dynamics 365.

And I hope that Microsoft soon fixes the error that stops us from using Chrome with ver. 9.0. Any day now!

