Large migration – lessons learned

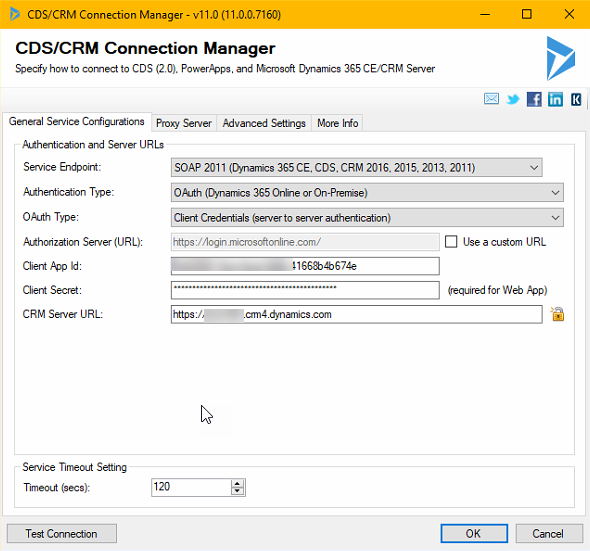

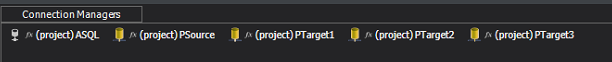

I was recently in charge of a large migration. It all went fine, but not without the hiccups that typically come with moving large amounts of data to Dataverse. We used SSIS with KingswaySoft and ended up using a local SQL database as a staging database too. This article discusses the lessons learned and gives some concrete tips for similar migrations.

One of my more popular articles describes how to optimize the writing of data to Dataverse/CDS. If you are migrating large amounts of data, as I describe here, I suggest you have a look at it: https://powerplatform.se/fast-data-management-in-a-limited-cds-world/. I will not discuss those concepts in any detail here, but we did use all the aspects mentioned in that article.

The migration used CSV file exports from an old German system (with German field names!) that held many millions of records in large tables such as “Contact” and “Sales Order”. However, the system we migrated from had a completely different data model from the one used in Dynamics. For instance, each row describing a “Flight” had to be split into two rows in the order detail table, one for the outbound flight and one for the return flight. We also had to create a lot of related data that was referenced from the “Flight” table, for example location, agent and brand. In other words, there were quite a lot of heavy transformations and a lot of logic involved, such as reformatting the old data to match the Dataverse model and applying rules to resolve old issues, such as bugs.
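
To make the “Flight” example concrete, here is a minimal Python sketch of that kind of row split. It is only an illustration: the field names (`booking_id`, `origin`, `outbound_date` and so on) and the `-OUT`/`-RET` suffixes are assumptions made up for this example, not the real columns of the source system, and the actual transformation was built in SSIS and SQL.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class FlightRow:
    # Hypothetical shape of one legacy "Flight" row (illustrative field names).
    booking_id: str
    origin: str
    destination: str
    outbound_date: date
    return_date: date


@dataclass
class OrderDetail:
    # Hypothetical shape of one row in the target order detail table.
    legacy_id: str
    direction: str
    departure: str
    arrival: str
    flight_date: date


def split_flight(row: FlightRow) -> List[OrderDetail]:
    """Turn one legacy flight row into an outbound and a return order detail."""
    return [
        OrderDetail(f"{row.booking_id}-OUT", "outbound",
                    row.origin, row.destination, row.outbound_date),
        # The return leg swaps departure and arrival.
        OrderDetail(f"{row.booking_id}-RET", "return",
                    row.destination, row.origin, row.return_date),
    ]


details = split_flight(
    FlightRow("B1", "ARN", "MUC", date(2020, 1, 1), date(2020, 1, 8))
)
for detail in details:
    print(detail)
```

Deriving the id of each new row from the source id plus a fixed suffix keeps the result repeatable, so a reload produces the same keys.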

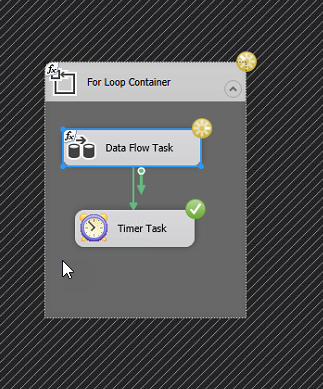

Initially we only got a fairly small subset of the full data load, and we started our migration journey by building all the migration logic in SSIS (which facilitates scripting and makes updates easy to handle). The script included some functions that “joined” rather large tables, both from the CSV files and from related data fetched from Dataverse based on primary and alternate keys. I was clear with the customer from the very beginning that I wanted a full export with the same amount of data that we could expect in the final migration, mainly to be able to stress-test the SSIS script before the migration to the production environment took place. After a while we got the big files…

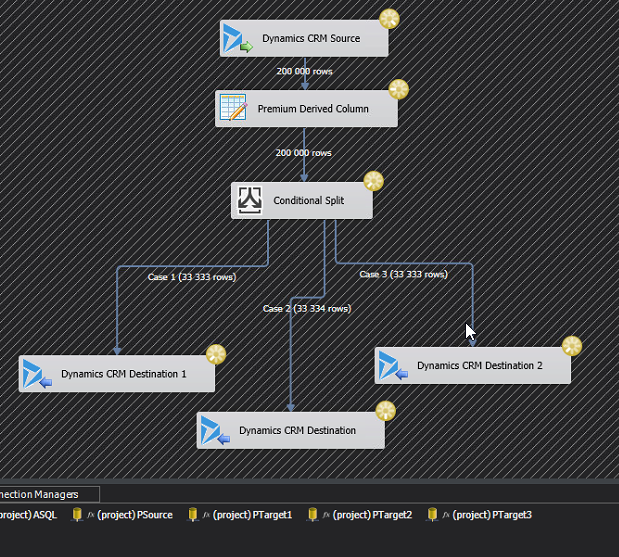

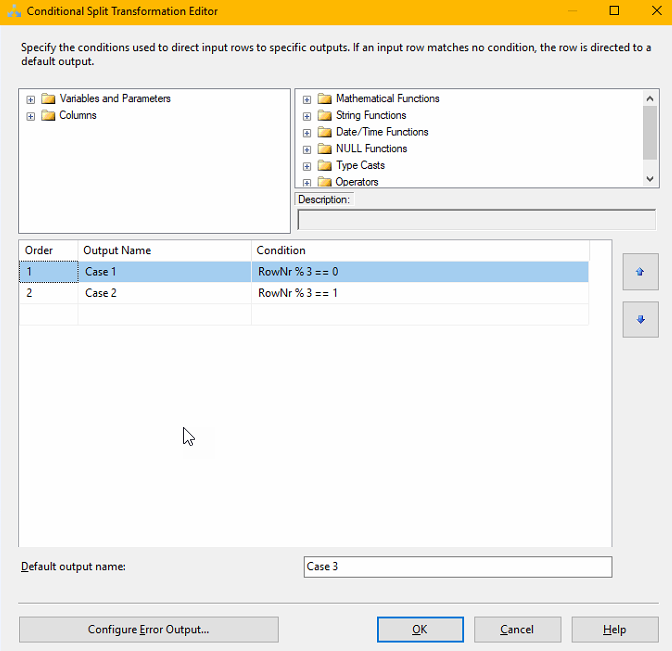

…And this was when the excrement hit the wind generator. The aforementioned lookups just stalled forever. We noted that a lookup (using the KingswaySoft Premium Lookup) works fine on a computer with 16 GB of memory up to a few hundred thousand records. However, once the data reaches 500k records or more, it just stalls (and don’t even get me started on the sort tools…). We are not sure whether adding more cores and memory would have fixed this, as we didn’t try. We therefore had to rewrite the script and implement a staging database instead. What we found is that a data flow with lookups over 1M+ records will be 100x faster if you import the data into SQL and do a join instead.

Lookups still work for smaller tables and I am not against them per se, as they do make the migration simpler. Adding more tables to a migration database increases complexity: if you want to add a column to a table, the column has to be added not just in one SSIS data flow but probably in a few more, and you must also run an ALTER TABLE in SQL to add the field there. It is therefore important to have a good mapping in place before you start building the script, and to keep things as simple as possible. You can also use SQL tasks in the migration script to update the tables automatically right after loading them into the staging database, if you need to apply some rules at that point and find that easiest to do with an SQL query.
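
The idea of replacing per-row lookups with one set-based join can be sketched as follows. This is a minimal illustration, not our actual script: it uses Python’s built-in `sqlite3` module as a stand-in for the SQL staging database, and the table and column names (`src_order`, `src_contact`, `customer_no`) are assumptions made up for the example.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the staging database.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_order (order_no TEXT PRIMARY KEY, customer_no TEXT);
    CREATE TABLE src_contact (customer_no TEXT PRIMARY KEY, full_name TEXT);
""")

# In a real run these tables would be bulk-loaded from the CSV exports.
conn.executemany(
    "INSERT INTO src_order VALUES (?, ?)",
    [("SO-1", "C-1"), ("SO-2", "C-2"), ("SO-3", "C-9")],
)
conn.executemany(
    "INSERT INTO src_contact VALUES (?, ?)",
    [("C-1", "Anna"), ("C-2", "Bernd")],
)

# One join resolves all rows at once instead of one lookup per row.
# LEFT JOIN keeps orders without a matching contact, so they stay visible.
rows = conn.execute("""
    SELECT o.order_no, c.full_name
    FROM src_order AS o
    LEFT JOIN src_contact AS c ON c.customer_no = o.customer_no
    ORDER BY o.order_no
""").fetchall()

for order_no, full_name in rows:
    print(order_no, full_name)
```

The order with the unknown customer number comes back with an empty name instead of silently disappearing, which makes unmatched rows easy to count and investigate.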

The method we used for developing the migration was to first build a “skeleton” migration based on the target data model. In other words, we started by migrating a few of the easiest fields, not all, from every table involved in the migration, instead of starting with one table, completing it and then moving on to the next. Maybe it could be called a model-first approach. The advantage of the model-first approach is that you can start testing the data quite early, for instance by setting up quantitative tests that check the number of contacts in the source system and compare it to the number in the target. The tests can typically be done by people other than those building the migration scripts, and hence this method scales a lot better than a table-by-table approach. It is also possible for several developers to work in parallel on different tasks. Typically the more senior developers build the skeleton, and the more junior ones can then add field after field to each table. A negative aspect of this approach is that it requires a lot of reloads and remapping (keep in mind that this was a first migration, so there was no prior data in Dataverse that we needed to consider). It may also be easier to “fall out of” the structure if you just need “to add a little bit here and there”. It is, however, hard to go table by table, especially with related data. If you already have a lot of live data, you should think about a way to easily identify the migrated data so you can bulk-delete it. And do not forget to engage the client early with your questions and the mapping, to make sure you have understood everything correctly and to avoid unnecessary errors.

We also tried to create unique row identities that were based strictly on the source data. This is very useful, as it allows for delta migration, or for continuing where we left off in case of a problem. Let’s say, for instance, that you want to migrate 3 million contacts. If the script breaks for some reason after 2.1 million contacts, it is good to be able to continue at 2.1M instead of restarting. In this case we didn’t use the modifiedon date to build full delta migration logic, but it is certainly possible. For this we used cache transforms to fetch the keys of the already migrated data (if any) and filter out the source rows whose key matched.
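
The resume logic can be sketched in a few lines of Python. This is a hedged illustration only: hashing the key fields is one possible way to get a repeatable identity and is an assumption of this example, as are the column names `customer_no` and `email` and the helper names `legacy_key` and `pending_rows`. In SSIS the filtering was done with cache transforms rather than code like this.

```python
import hashlib
from typing import Dict, Iterable, Iterator, Set, Tuple


def legacy_key(*parts: str) -> str:
    """Build a repeatable key from source fields only (no counters, no timestamps)."""
    normalized = "|".join(part.strip().lower() for part in parts)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:32]


def pending_rows(
    source_rows: Iterable[Dict[str, str]], migrated_keys: Set[str]
) -> Iterator[Tuple[str, Dict[str, str]]]:
    """Yield only the rows whose key is not yet present in the target."""
    for row in source_rows:
        key = legacy_key(row["customer_no"], row["email"])
        if key not in migrated_keys:
            yield key, row


source = [
    {"customer_no": "C-1", "email": "a@example.de"},
    {"customer_no": "C-2", "email": "b@example.de"},
]
# Keys read back from the target after an interrupted run (hypothetical data).
already_migrated = {legacy_key("C-1", "a@example.de")}

todo = list(pending_rows(source, already_migrated))
print(len(todo), "row(s) left to migrate")
```

Because the key depends only on the source values, rerunning the script after a failure produces the same keys, and the rows that already made it into the target are skipped.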

Another pattern we used was that, after creating a specific type of record, like contact, we re-imported the record id (in this case contactid) together with the legacy id. When we later added tables with dependencies, such as lookups towards the contact table, we could join them with this mapping table and directly get the contactid when querying the related table.
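
A minimal sketch of the mapping-table pattern, again using Python’s built-in `sqlite3` as a stand-in for the staging database. The table names (`map_contact`, `stg_salesorder`), the column names and the GUID values are assumptions made up for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the staging database
conn.executescript("""
    -- Mapping table: filled by reading contactid + legacy id back from the target.
    CREATE TABLE map_contact (legacy_id TEXT PRIMARY KEY, contactid TEXT NOT NULL);
    -- Dependent table that only knows the customer by its legacy id.
    CREATE TABLE stg_salesorder (order_no TEXT PRIMARY KEY, customer_legacy_id TEXT);
""")
conn.executemany(
    "INSERT INTO map_contact VALUES (?, ?)",
    [
        ("C-1", "00000000-0000-0000-0000-000000000001"),
        ("C-2", "00000000-0000-0000-0000-000000000002"),
    ],
)
conn.executemany(
    "INSERT INTO stg_salesorder VALUES (?, ?)",
    [("SO-1", "C-2"), ("SO-2", "C-1"), ("SO-3", "C-7")],
)

# Resolve the contact lookup for every sales order with a single join.
resolved = conn.execute("""
    SELECT s.order_no, m.contactid
    FROM stg_salesorder AS s
    LEFT JOIN map_contact AS m ON m.legacy_id = s.customer_legacy_id
    ORDER BY s.order_no
""").fetchall()

# Orders whose customer was never migrated need attention before loading.
unresolved = [order_no for order_no, contactid in resolved if contactid is None]
print("unresolved orders:", unresolved)
```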

Tips

- When migrating from CSV, import the files directly as source tables in the staging database. That way, in case you need to fix something, you have a good reference for quantities.

- Get an example of the full data load as early as possible. A script that works for a subset might not work at all for the full data load, as was the case for us.

- Automate as much as possible. Don’t use any hardcoded values that are environment-specific, such as transactioncurrencyid, but rather read these into small tables or SSIS variables. Use SQL TRUNCATE to quickly remove all data from a table, and make this part of the SSIS script as an SQL task at the appropriate stage.

- Always check the quantities. Count the rows in the source data and the rows after each match, and check whether they differ, so you can identify very early any bugs in your script that cause rows to be dropped. For example, you might have used a JOIN when you should have used an OUTER JOIN. Always check the total number and see if it is what you expect. Watch out for duplicates, and always check that your unique IDs (if you got some from the source data) really are unique and not NULL. Do note that duplicates in a column you join on will multiply rows. Hence it is possible for a SELECT statement with joins to return more records than the initial table.

- Define reasonable goals and test cases for the migration. Some examples:

  - 99.9% of all contacts are to be migrated correctly. With 1M records, this means that up to 1,000 incorrectly migrated or missed contacts is still defined as OK.

  - Randomly pick 10-20 records at a base level, like 20 customers, and compare these in the UAT/Test environment with how they appear in the source system. This needs to be done by the business people, so that they can have a say on whether the migrated data is fine.

  - Select some filters, like “all customers in Munich” and some other segmentations, and compare the source system to the destination. If there are large numbers of errors, backtrack to the staging database to see where you lost some records or created too many (not uncommon).

- Complete the entire transformation into the destination tables in the staging db. Then you can move the data directly from there to Dataverse. This is particularly important when moving large quantities of data, where managing the data in SSIS can be problematic.

- Make sure to have unique identifiers on all tables, preferably ones that can be regenerated from the data. Store these in some “Legacy ID” field. This allows for delta migration logic, i.e. where part of the data is migrated first and the rest later. If you have issues during one of the data flows and it stops at record 3,230,234 of 6M, you can continue from there and don’t have to redo it all. If there is no decent way of getting a legacy id, you can generate classic row numbers by creating an identity column. The migration can then use these numbers, but they are only valid within that particular instance and load of the staging db. Hence you must be careful every time you reload the database.

- Utilize the backup and restore functionality of the Dataverse environments. Do note that you can make manual backups just before you start the migration. If you have a production environment, it will need to be converted to a sandbox environment before you can restore to it. Another option I got from a colleague was to use 3 different environments with temporary names, and then just rename the final one when done.

- Once you have transferred an entire table to the target system, it is typically very useful to have a mapping table with just the table record id and the legacy id. So for instance, after migrating Contact, read all contacts from Dataverse with the contactid and the legacy id. That way, when later migrating “salesorders”, which identify the customer by legacy id, it is easy to just join with this table to get the contactid.

- Production environments are faster than sandbox environments. Fastest of all is to ask Microsoft Support to relax the throttles on all environments that are used during the migration.

- Use a VM that is located geographically close to where the environments are hosted (or, really, one with low latency and high throughput to them). This is a very common recommendation from KingswaySoft too.

- The settings for the number of threads and the batch size need to be chosen based on several factors, namely:

  - Production or sandbox environment

  - Whether throttles have been relaxed

  - Size of the payload (i.e. how many columns): larger payload -> smaller batches.

  - Type of action: creates are faster than deletes, and updates are in between.
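
The quantity checks from the tips above can be sketched as a few queries against the staging database. As before, this is only an illustration under stated assumptions: Python’s built-in `sqlite3` stands in for the staging database, the tables and data are made up, and the helpers `scalar` and `within_tolerance` are hypothetical names introduced for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the staging database
conn.executescript("""
    CREATE TABLE src_contact (legacy_id TEXT, city TEXT);
    CREATE TABLE src_order (order_no TEXT, contact_legacy_id TEXT);
    -- 'C-2' is duplicated on purpose and one contact has no id at all.
    INSERT INTO src_contact VALUES
        ('C-1', 'Munich'), ('C-2', 'Berlin'), ('C-2', 'Berlin'), (NULL, 'Hamburg');
    INSERT INTO src_order VALUES ('SO-1', 'C-1'), ('SO-2', 'C-2');
""")


def scalar(sql: str) -> int:
    """Run a query that returns a single number."""
    return conn.execute(sql).fetchone()[0]


order_count = scalar("SELECT COUNT(*) FROM src_order")

# The duplicate 'C-2' multiplies SO-2, so the join returns more rows than orders.
joined_count = scalar("""
    SELECT COUNT(*)
    FROM src_order AS o
    JOIN src_contact AS c ON c.legacy_id = o.contact_legacy_id
""")

# Keys that are not unique, and rows that have no key at all.
duplicate_keys = conn.execute("""
    SELECT legacy_id, COUNT(*)
    FROM src_contact
    WHERE legacy_id IS NOT NULL
    GROUP BY legacy_id
    HAVING COUNT(*) > 1
""").fetchall()
null_keys = scalar("SELECT COUNT(*) FROM src_contact WHERE legacy_id IS NULL")


def within_tolerance(total: int, failed: int, max_failed_per_thousand: int = 1) -> bool:
    """True if failures stay within the agreed rate (1 per 1000 equals 99.9% correct)."""
    return failed * 1000 <= total * max_failed_per_thousand


print("orders:", order_count, "rows after join:", joined_count)
print("duplicate keys:", duplicate_keys, "rows without key:", null_keys)
print("1,000 failures of 1M acceptable:", within_tolerance(1_000_000, 1_000))
```

Comparing `order_count` with `joined_count` after every join is a cheap way to catch both dropped and multiplied rows before anything is written to Dataverse.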

I hope these tips can help you along. If you have any comments or other experiences on this subject, don’t hesitate to leave a comment.

During this migration and the writing of this article, I had excellent help from my highly intelligent colleague Ebba Linnea Nilsson and it is certainly true that two heads are better than one, and the end result is often a lot better than just the sum of two people. So for my final recommendation, make sure to have a good colleague with you to help you out, as you most probably will run into some issues and having someone to discuss with is really great!

Good luck on your migration challenge!
